AI Arms Race: Ukraine and Russia’s Use of Artificial Intelligence Is Changing the Rules of War

What role does AI have in war? In Russia’s war against Ukraine, it’s already shaping weapons, steering operations, spreading disinformation, and aiding post-war recovery efforts.

The war in Ukraine has become a high-tech proving ground where AI is transforming the nature of war. Air superiority is no longer a guaranteed path to victory: a $400 AI-powered drone can destroy a $250 million bomber jet. But the implications go far beyond the front lines. AI is reshaping global security, political stability, and the integrity of information itself.

How is AI being used on the battlefield and beyond?

Ukraine’s AI deployment

Ukraine is rapidly deploying AI in both tactical and strategic domains on the battlefield. Main areas include:

Targeting and intelligence;

Command and control systems;

Autonomous drone warfare;

Strategic operations.

AI-powered platforms like Palantir provide real-time intelligence, integrating satellite and drone data to optimize targeting. The company’s software is responsible for the majority of targeting operations in Ukraine, said Palantir CEO Alex Karp. A situational awareness system built on the Palantir platform enables commanders to make faster, more informed decisions, Ukrainian Digital Transformation Minister Mykhailo Fedorov said.

Machine learning engineers are embedded in Ukrainian military units, indicating deep operational integration of AI at the tactical level. Ukraine has also launched cloud platforms like Delta and Kropyva, which collect and analyze massive volumes of battlefield intelligence. Delta aggregates real-time data from drones, satellites, and ground sensors, creating a unified command picture and significantly enhancing decision-making capabilities.

AI-powered drones such as the Bulava have demonstrated autonomous target acquisition and combat operations under electronic warfare conditions. Ukraine has also upped the game in drone warfare with its new “mother drones”—large unmanned platforms that launch strike FPV drones deep into enemy territory. Already battle-tested, these systems have proven their effectiveness.

One of Ukraine’s latest developments is the Sky Sentinel turret, an AI-controlled automated air defense system capable of autonomously detecting, tracking, and firing at Russian drones.

In June 2025, Ukraine conducted Operation Spiderweb—a historic drone strike that damaged or destroyed 41 Russian aircraft, inflicting over $7 billion in losses. The drones used in this mission could switch to AI-driven autonomous control when the signal was lost, following pre-programmed routes and targeting aircraft using visual recognition algorithms. The operation showcased AI’s potential for delivering precision strikes and achieving strategic disruption.

Beyond the battlefield, artificial intelligence is powering Ukraine’s postwar recovery—from clearing mines to crafting next-gen prosthetics. Backed by a €700 million international funding package, Ukraine has rolled out AI-driven demining systems that scan satellite images and drone footage to spot unexploded ordnance and landmines. The technology slashes clearance time and minimizes risk, especially in farmland and residential areas.

On the rehabilitation front, Ukrainian-American startup Esper Bionics is breaking ground with AI-enabled prosthetics. Its flagship product, the Esper Hand, uses real-time feedback and machine learning to adjust grip patterns, giving users refined motor control for everything from daily chores to precision tasks.

Russia’s AI arsenal

Russia has responded with its own suite of AI systems. The V2U UAV, equipped with a propeller drive, uses computer vision to navigate and identify targets while avoiding GPS interference. Built with Chinese components and American AI processors, it underscores the global nature of AI supply chains for military use.

Another Russian development, the Lancet-3 attack drone, features an onboard AI module for object recognition and targeting. Although there have been reports of reliability issues with its autonomous guidance, the Lancet remains a mainstay in Russia’s drone arsenal.

Beyond the battlefield, AI is central to Russia’s propaganda machine. The Pravda Network—a massive disinformation ecosystem also known as “Portal Kombat”—comprises hundreds of fake news sites designed to mimic legitimate media outlets. These sites have published millions of articles sourced from pro-Kremlin content and translated into multiple languages using automated tools.

Their objectives include:

Polluting the online information space with coordinated, seemingly diverse but ideologically aligned content;

Contaminating AI training datasets by seeding the internet with biased material;

Laundering disinformation by having AI systems later reproduce this biased content as if it were neutral or fact-based.
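To illustrate how this kind of coordinated republishing might be spotted, here is a minimal sketch, not a description of any tool used by researchers cited in this article. It compares articles from different sites by their overlapping three-word sequences and flags pairs that are nearly identical. The function names, the sample site names, and the 0.6 threshold are all assumptions chosen for illustration. The sketch only handles text in a single language; translated copies would first need to be translated back or compared by other means.

```python
import re
from itertools import combinations


def shingles(text, k=3):
    """Return the set of k-word sequences (shingles) found in the text."""
    words = re.findall(r"\w+", text.lower())
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a, b):
    """Jaccard similarity of two sets: shared items divided by all items."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def near_duplicates(articles, threshold=0.6):
    """Return pairs of site names whose articles overlap at or above the threshold.

    `articles` maps a (hypothetical) site name to its article text.
    The threshold is an illustrative assumption, not a calibrated value.
    """
    sets = {site: shingles(text) for site, text in articles.items()}
    return [
        (s1, s2)
        for s1, s2 in combinations(sorted(sets), 2)
        if jaccard(sets[s1], sets[s2]) >= threshold
    ]
```

Run over a large crawl, a check like this would surface clusters of sites publishing the same text under different mastheads, which is one signal that content may need to be excluded or down-weighted before it is used to train a model.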

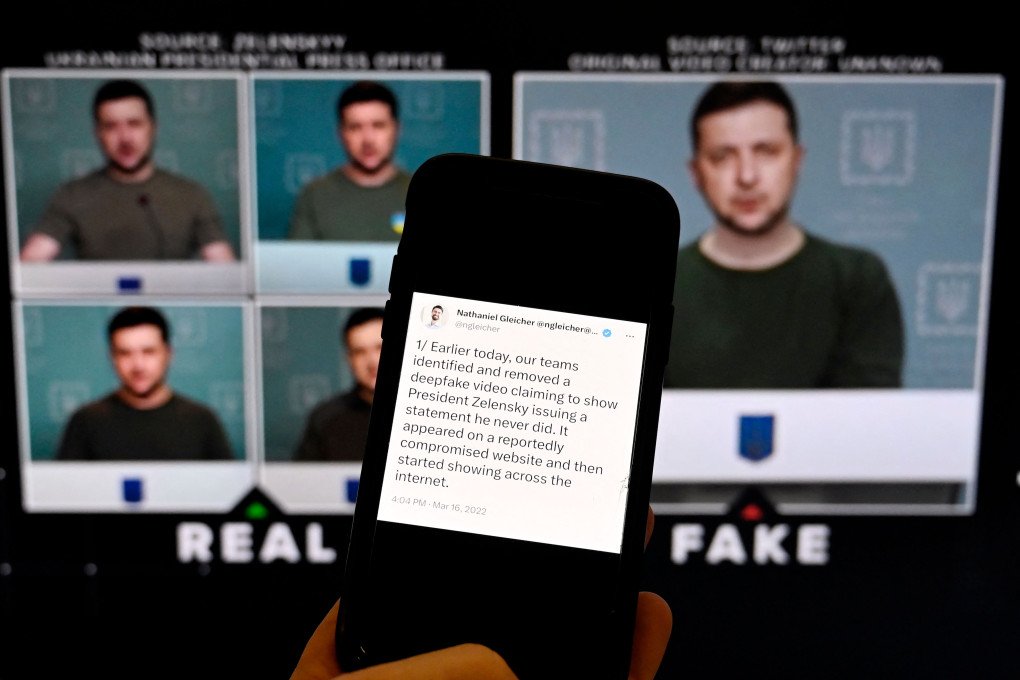

One prominent example of AI-driven disinformation was a deepfake video purporting to show Ukraine’s First Lady, Olena Zelenska, purchasing a $4.8 million Bugatti. The fabricated video featured a fake dealership employee and a forged invoice. Although Bugatti Paris and fact-checkers quickly debunked it, the clip went viral, garnering over 20 million views. This underscores how even poorly made deepfakes can achieve massive reach and sow public distrust.

Iran and North Korea are also actively using AI for malicious purposes. Iran has integrated AI into its cyber operations and regional influence campaigns, employing AI-generated fake media and personas to evade sanctions, coordinate attacks, and manipulate regional narratives. North Korea uses generative AI to create false identities for espionage, financial fraud, and infiltration efforts. These capabilities allow Pyongyang to generate revenue and gather intelligence.

Russia is weaponizing AI to enhance its disinformation war: spreading fake narratives faster, more convincingly, and at massive scale.

UNITED24 Media (@United24media), May 29, 2025

AI as a weapon and a wedge

AI-powered weapons are altering the core dynamics of war. In Ukraine, artificial intelligence is no longer just a support tool. AI is central to battlefield operations, guiding precision strikes, accelerating command decisions, and shaping real-time situational awareness. At the same time, authoritarian regimes are using AI not for efficiency, but for control, deploying it to destabilize information environments and manipulate foreign perception.

Deepfakes have become a staple of psychological operations. A fake video of Ukraine’s first lady buying a $4.8 million Bugatti went viral despite being quickly debunked. The effectiveness of such fakes isn’t in their quality but in their timing; they exploit existing narratives and biases before truth can catch up.

The long-term concern is structural. By seeding the internet with false or distorted content, state actors can influence the behavior of large language models (LLMs). As AI increasingly shapes how people access and understand information, the integrity of its training data becomes a matter of strategic security.

What’s next?

As AI becomes more embedded in military systems, global attention is shifting toward regulation, safety, and transparency. Key priorities include securing training data, raising digital literacy, and improving accountability in generative models.

Western nations are beginning to act. Investments are being made in threat detection, cyber defense, and cross-border coordination. Governments are updating military doctrines, drafting legal frameworks to counter AI-driven disinformation, and launching public campaigns to raise awareness of deepfake technologies.

In Ukraine, development continues on AI-powered command systems, robotic combat platforms, and drones. The Brave1 technology platform supports cutting-edge defense startups and fosters an adaptive ecosystem for innovation in security and defense.

States like Russia, Iran, and North Korea are also expanding their AI arsenals for information warfare, espionage, and economic sabotage. Their evolving tactics are fueling a dynamic threat landscape that is increasingly difficult to monitor or predict.

Russia’s invasion of Ukraine has become the first true test case for AI in war. The focus is no longer just on military superiority. It is also on narrative control, data integrity, and digital influence.
